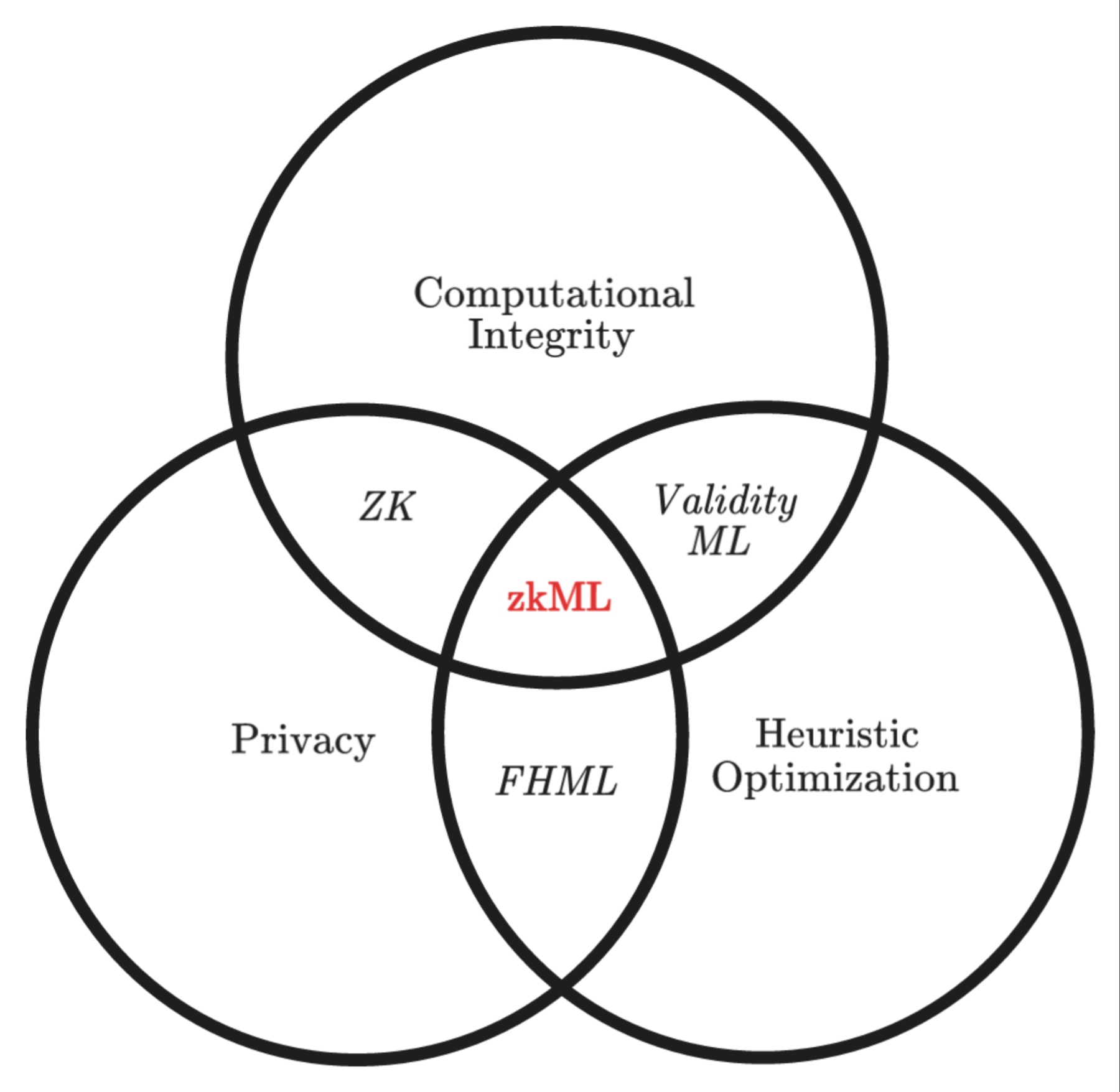

zkML: The unlikely union between zk Proofs and ML

zkML and its significance in the context of privacy-preserving machine learning

In the realm of artificial intelligence, machine learning has emerged as a transformative force, revolutionizing industries and reshaping our daily lives. However, this powerful technology is not without its challenges, particularly in the domain of data privacy. As machine learning algorithms are trained on vast amounts of personal data, concerns about data breaches, unauthorized access, and the potential for misuse have become increasingly prevalent.

Zero-knowledge machine learning (zkML) emerges as a promising solution to these privacy concerns. zkML uses cryptographic proofs to show that a machine learning computation was carried out correctly without revealing the private data or model parameters involved. This allows for the development of secure and privacy-preserving machine learning models, enabling us to harness the insights from data without compromising individual privacy.

To fully grasp the significance of zkML, let's first understand the limitations of traditional machine-learning approaches. Traditional machine learning algorithms rely on direct access to sensitive data, such as medical records, financial transactions, or social media interactions. This data is often stored centrally in databases or cloud servers, making it vulnerable to cyberattacks and data leaks. Moreover, the process of training and evaluating machine learning models often involves sharing sensitive data with third parties, raising concerns about unauthorized access and data misuse.

zkML addresses these privacy concerns by employing cryptographic techniques to ensure that machine learning computations can be performed without revealing the underlying data. This is achieved through the use of zero-knowledge proofs (ZKPs), ingenious cryptographic tools that allow one party to prove to another that they possess certain knowledge without actually revealing that knowledge itself.

Imagine a scenario where a healthcare provider wants to use a machine learning model to predict the risk of heart disease for its patients. Using zkML, the provider can keep patient data confidential while still letting others check the results. The patient data stays private, either because it never leaves the provider or because it is processed under a complementary technique such as homomorphic encryption, and a zero-knowledge proof attests that the stated model really produced each prediction. A verifier checks the proof and learns nothing about any individual patient record.

zkML holds immense potential to revolutionize various industries by enabling privacy-preserving machine learning applications. In the healthcare sector, zkML can facilitate secure medical research and personalized treatment plans without compromising patient privacy. In the financial domain, zkML can enable fraud detection, risk assessment, and personalized financial services without exposing sensitive financial data. In the social media realm, zkML can empower users to control their data and privacy while still enjoying personalized recommendations and targeted advertising.

As zkML technology continues to mature, we can expect to see a growing number of real-world applications that harness its power to safeguard individual privacy while enabling the benefits of machine learning. zkML represents a critical step towards a future where we can leverage the power of data-driven insights without compromising the fundamental right to privacy.

Challenges and limitations of traditional machine learning approaches in preserving data privacy

Traditional machine learning approaches make it hard to preserve data privacy. The main risks are data breaches, unauthorized access, and misuse of data, and they show up in several distinct ways.

Data Leakage and Breaches: Traditional machine learning models are trained on vast amounts of personal data, often stored centrally in databases or cloud servers. This centralization makes the data vulnerable to cyberattacks and data breaches. In the event of a breach, sensitive information such as medical records, financial transactions, or social media interactions could be exposed, compromising individuals' privacy and potentially leading to identity theft, financial fraud, or other harm.

Unauthorized Access and Misuse: The process of training and evaluating machine learning models often involves sharing sensitive data with third parties. This sharing of data increases the risk of unauthorized access, as data may be intercepted or accessed by unauthorized individuals during transmission or while stored on third-party servers. Moreover, even when data is shared securely, there is a risk of misuse. Third parties may use the data for purposes beyond the originally intended scope, potentially leading to discriminatory practices, unfair profiling, or other forms of privacy violations.

Limited Control over Data: Traditional machine learning approaches often give individuals limited control over their data. Once individuals' data is collected and used for training machine learning models, they may have little say in how their data is processed, stored, or shared. This lack of control can lead to individuals feeling powerless over their privacy and unable to protect their sensitive information.

Re-identification Attacks: Even when data is anonymized or aggregated, there is a risk of re-identification attacks. By linking anonymized data with external information, such as publicly available datasets or social media profiles, attackers may be able to re-identify individuals, potentially exposing their sensitive information.

Transparency and Auditability: Traditional machine learning models are often complex and opaque, making it difficult to understand how they make decisions. This lack of transparency can hinder individuals' ability to trust these models and make informed decisions about their data. Moreover, the lack of auditability makes it challenging to identify and address potential biases or errors in machine learning models.

These challenges highlight the need for privacy-preserving machine learning approaches that address the limitations of traditional methods. Zero-knowledge machine learning (zkML) emerges as a promising solution, enabling machine learning computations without revealing the underlying data, thus safeguarding individual privacy while harnessing the power of data-driven insights.

Zero-knowledge proofs (ZKPs) and their role in enabling zkML

In the realm of cryptography, zero-knowledge proofs (ZKPs) have emerged as ingenious tools that enable one party to prove to another that they possess certain knowledge without actually revealing that knowledge itself. This remarkable property has opened up a world of possibilities in various applications, including zkML, where ZKPs play a pivotal role in safeguarding data privacy while enabling the benefits of machine learning.

To grasp the essence of ZKPs, imagine a scenario where Alice wants to convince Bob that she knows the password to a secret vault without actually revealing the password itself. Alice could use a ZKP to demonstrate her knowledge without compromising the secrecy of the password. This is achieved through an interactive protocol in which Bob poses random challenges that only someone who knows the password could answer consistently.
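The Alice and Bob scenario can be sketched with the Schnorr identification protocol, a classic three-move protocol in which Alice sends a commitment, Bob replies with a random challenge, and Alice returns a response. This is a minimal illustration, not a production implementation: the group parameters `P`, `Q`, and `G` are tiny toy values chosen for readability, the function names are hypothetical, and the protocol is zero-knowledge only against a verifier who picks challenges honestly.

```python
import secrets

# Toy group (assumption, insecure): P = 2Q + 1 with P and Q prime,
# and G generates the subgroup of order Q. Real systems use ~256-bit groups.
P, Q, G = 2039, 1019, 4

def keygen():
    """Alice's secret x (the 'password') and public key y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)

def commit():
    """Move 1: Alice picks a random nonce r and sends t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)

def challenge():
    """Move 2: Bob sends a random challenge c."""
    return secrets.randbelow(Q)

def respond(x, r, c):
    """Move 3: Alice answers with s = r + c*x mod Q."""
    return (r + c * x) % Q

def verify(y, t, c, s):
    """Bob accepts if G^s == t * y^c mod P. He never sees x."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P

x, y = keygen()
r, t = commit()
c = challenge()
s = respond(x, r, c)
print(verify(y, t, c, s))  # True when Alice really knows x
```

Bob's check works because G^s = G^(r + c·x) = t · y^c. The response s is masked by the fresh random nonce r, which is why a single transcript does not reveal x.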

In the zkML context, ZKPs serve as the cornerstone for verifiable, privacy-preserving machine learning. A ZKP lets the party running a model prove that a given output was computed correctly from inputs or model weights that stay hidden from the verifier. Computing directly on encrypted data is the job of complementary techniques such as homomorphic encryption; the proof adds the guarantee that the computation was performed as claimed.

Consider a scenario where a healthcare provider wants to use a machine learning model to predict the risk of heart disease for its patients using their medical records. Without ZKPs, anyone who wanted to check the provider's predictions would need access to the medical records themselves, raising privacy concerns. With ZKPs, the provider keeps the records private, runs the model, and publishes each prediction together with a proof that it came from the agreed model. The verifier checks the proof without ever seeing the underlying data, preserving patient privacy.

ZKPs offer a range of advantages in enabling zkML:

1. Privacy Preservation: ZKPs ensure that sensitive data remains confidential throughout the machine learning process, preventing unauthorized access and misuse.

2. Data Security: Because only the proof and the public outputs are shared, the underlying data never has to leave its owner's control, which reduces its exposure to breaches and attacks.

3. Enhanced Trust: ZKPs increase transparency and auditability in machine learning models, fostering trust among individuals and promoting responsible data practices.

4. Decentralization: ZKPs enable decentralized machine learning applications, where data can be processed and analyzed without the need for centralized servers.

5. Scalability: ZKPs are becoming increasingly efficient, paving the way for practical applications in large-scale machine learning tasks.

The emergence of zkML, powered by ZKPs, marks a significant step towards a future where we can harness the power of data-driven insights without compromising individual privacy. ZKPs hold immense potential to revolutionize various industries, from healthcare to finance to social media, by enabling privacy-preserving machine learning applications that safeguard sensitive data while unlocking transformative insights.

Foundations of zkML

Fundamental concepts of zkML

1. Privacy-Preserving Model Training

Model training, the process of building a machine learning model from a dataset, often involves sensitive data, raising privacy concerns. Traditional training methods require unencrypted data to be shared with the model, making it vulnerable to breaches and unauthorized access. zkML tackles this challenge by enabling privacy-preserving model training, where the data remains encrypted throughout the training process.

In zkML-based model training, the data is protected using cryptographic techniques such as homomorphic encryption, which encrypts it, or secure multi-party computation (SMC), which typically splits it into shares held by different parties. The training algorithm then runs on the protected data without ever seeing it in the clear. The model's weights and parameters are protected in the same way, so the training process does not reveal sensitive information.

2. Privacy-Preserving Inference

Inference, the process of using a trained machine learning model to make predictions on new data, also poses privacy risks. Traditional inference methods require the input data to be decrypted before being fed into the model. zkML addresses this challenge by enabling privacy-preserving inference, where the input data remains encrypted throughout the inference process.

In zkML-based inference, the encrypted input data is fed into the model, which performs its computations on the encrypted data without decrypting it. The model's output, which is the prediction, is also encrypted. This ensures that neither the input data nor the prediction is exposed in its unencrypted form, safeguarding individual privacy.

3. Verification of Model Accuracy and Predictions

A critical aspect of machine learning is verifying the accuracy of the model and its predictions. However, traditional verification methods often require access to the training data or ground truth labels, which can compromise privacy. zkML addresses this challenge by enabling verification without revealing sensitive information.

In zkML-based verification, ZKPs are used to prove that the model has been trained correctly on the data without revealing the data itself. Similarly, ZKPs can be used to prove that the model's predictions are accurate without revealing the ground truth labels. This enables users to trust the model's performance without compromising their privacy.
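A proof about a hidden model is only meaningful if the verifier knows which model it refers to. One common building block for that is a commitment to the model weights, published before any proofs are produced. The sketch below uses a salted SHA-256 hash as the commitment. It is an assumption-laden illustration rather than a zero-knowledge proof in itself: the function names are hypothetical, and a real zkML system would use a commitment scheme that can be checked inside the proof.

```python
import hashlib
import hmac
import secrets
import struct

def commit_weights(weights, nonce=None):
    """Return (digest, nonce). The digest can be published; the nonce stays secret."""
    if nonce is None:
        nonce = secrets.token_bytes(32)  # random salt hides low-entropy weights
    payload = struct.pack(f"<{len(weights)}d", *weights)  # little-endian doubles
    digest = hashlib.sha256(nonce + payload).hexdigest()
    return digest, nonce

def open_commitment(digest, weights, nonce):
    """Check that (weights, nonce) match a previously published digest."""
    payload = struct.pack(f"<{len(weights)}d", *weights)
    candidate = hashlib.sha256(nonce + payload).hexdigest()
    return hmac.compare_digest(candidate, digest)

digest, nonce = commit_weights([0.5, -1.25, 2.0])
print(open_commitment(digest, [0.5, -1.25, 2.0], nonce))  # True
print(open_commitment(digest, [0.5, -1.25, 2.1], nonce))  # False
```

The commitment binds the prover to one set of weights, so it cannot quietly swap models between predictions, while the published digest on its own reveals nothing useful about the weights.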

Different types of zkML protocols

1. Homomorphic Encryption-Based zkML

In homomorphic encryption-based zkML, data is encrypted using homomorphic encryption, a cryptographic technique that allows computations to be performed on encrypted data without decrypting it. This enables machine learning models to operate on encrypted data, preserving privacy while still extracting valuable insights.

Homomorphic encryption schemes provide two key properties:

- Homomorphism: Decrypting the result of an operation on ciphertexts gives the same value as applying the corresponding operation to the plaintexts. Some schemes support only addition or only multiplication, while fully homomorphic schemes support both.

- Semantic Security: A ciphertext reveals no information about the plaintext to anyone who lacks the decryption key.

These properties enable homomorphic encryption-based zkML to achieve privacy-preserving model training and inference. The model performs its computations on encrypted data without decrypting it. Homomorphic encryption on its own does not show that the right computation was performed, so verification relies on pairing it with ZKPs.
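To make the homomorphism property concrete, here is a minimal sketch using the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts adds the plaintexts, and raising a ciphertext to a power multiplies the plaintext by a constant. That is enough to compute a linear model's weighted sum over encrypted features. The primes are tiny toy values, the function names are hypothetical, and the weights are assumed to be non-negative integers known to the evaluator.

```python
import math
import secrets

def keygen(p=1009, q=1013):
    """Toy key generation (insecure sizes). Returns public n and private (lam, mu)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; needs Python 3.8+
    return n, (lam, mu)

def encrypt(n, m):
    """Enc(m) = (1 + m*n) * r^n mod n^2, using generator g = n + 1."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (1 + m * n) % n2 * pow(r, n, n2) % n2

def decrypt(n, priv, c):
    lam, mu = priv
    u = pow(c, lam, n * n)
    return (u - 1) // n * mu % n

def add(n, c1, c2):
    """Ciphertext product decrypts to the sum of the plaintexts."""
    return c1 * c2 % (n * n)

def scale(n, c, k):
    """Ciphertext power decrypts to k times the plaintext."""
    return pow(c, k, n * n)

def encrypted_score(n, enc_features, weights):
    """Weighted sum of encrypted features with plaintext integer weights."""
    acc = encrypt(n, 0)
    for c, w in zip(enc_features, weights):
        acc = add(n, acc, scale(n, c, w))
    return acc

n, priv = keygen()
enc_features = [encrypt(n, x) for x in [3, 5, 2]]   # data owner encrypts
score = encrypted_score(n, enc_features, [2, 1, 4])  # evaluator never decrypts
print(decrypt(n, priv, score))  # 19 = 2*3 + 1*5 + 4*2
```

Only the holder of the private key can read the score. Non-linear steps such as activation functions need either a fully homomorphic scheme or a different technique.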

2. Secure Multi-Party Computation (SMC)-Based zkML

In secure multi-party computation (SMC)-based zkML, multiple parties can collaborate on machine learning tasks without revealing their private data to each other. This is achieved through cryptographic protocols that ensure that each party's data remains confidential while still allowing for the necessary computations to be performed.

SMC protocols provide two key features:

- Privacy Preservation: Each party's data remains confidential, and no party can access the data of other parties.

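- Correctness: The computations performed on the joint data produce the right result, provided the parties behave within the adversary model the protocol is designed for (for example, honest-but-curious or malicious participants).

These features enable SMC-based zkML to achieve privacy-preserving model training, inference, and verification. Multiple parties can collaborate on training a model, or on running one, without any party disclosing its raw data to the others.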
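One of the simplest SMC building blocks is additive secret sharing. Each party splits its private value into random shares that sum to the value, hands one share to every participant, and only sums of shares are ever published. The sketch below computes a joint total this way. It assumes honest-but-curious parties, the modulus and function names are illustrative choices, and the "network" is just a list of lists.

```python
import secrets

MOD = 2**61 - 1  # illustrative modulus; must exceed any possible total

def share(value, n_parties):
    """Split value into n_parties random shares that sum to value mod MOD."""
    shares = [secrets.randbelow(MOD) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MOD)
    return shares

def reconstruct(shares):
    return sum(shares) % MOD

def secure_sum(private_values):
    """Each party learns the total but not any other party's input."""
    n = len(private_values)
    # Row i holds the shares party i hands out, one per recipient.
    dealt = [share(v, n) for v in private_values]
    # Party j adds up the shares it received and publishes only that partial sum.
    partial = [sum(dealt[i][j] for i in range(n)) % MOD for j in range(n)]
    return reconstruct(partial)

# Three hospitals pool a patient count without revealing their own numbers.
print(secure_sum([120, 75, 310]))  # 505
```

Any individual share is a uniformly random number, so a party that sees fewer than all the shares of a value learns nothing about it.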

Cryptographic tools and techniques used in zkML

1. Garbled Circuits

Garbled circuits are a cryptographic technique that lets two parties jointly evaluate a Boolean circuit on their private inputs. A Boolean circuit represents a Boolean function, which takes a set of binary inputs and produces a binary output, as a network of logic gates. To garble a circuit, one party assigns two random labels to every wire, one for each possible bit value, and encrypts each gate's truth table with a symmetric cipher so that a pair of input labels unlocks exactly one output label. The other party evaluates the circuit gate by gate using only the labels for the actual inputs, so it learns the final output but not the input bits or any intermediate values.

In zkML, garbled circuits are used to perform privacy-preserving machine learning computations. For example, a machine learning model can be expressed as a Boolean circuit and evaluated on private inputs without revealing the data or the model's parameters.
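A single garbled AND gate gives a feel for how this works. In the sketch below the garbler picks two random labels per wire and encrypts each truth-table row under a key derived from the matching pair of input labels. The evaluator, holding one label per input wire, can open exactly one row. This is a simplified toy: it uses SHA-256 as a one-time pad plus an all-zero tag to recognize the right row, omits the oblivious transfer step that would deliver the evaluator's labels in a real protocol, and all names are hypothetical.

```python
import hashlib
import secrets

LABEL_LEN = 16
TAG = b"\x00" * 16  # marks a correctly decrypted row

def _pad(label_a, label_b):
    """32-byte one-time pad derived from a pair of input labels."""
    return hashlib.sha256(label_a + label_b).digest()

def _xor(x, y):
    return bytes(i ^ j for i, j in zip(x, y))

def garble_and():
    """Garbler: returns the wire labels (kept secret) and the shuffled table."""
    labels = {
        wire: (secrets.token_bytes(LABEL_LEN), secrets.token_bytes(LABEL_LEN))
        for wire in ("a", "b", "out")
    }
    table = []
    for a in (0, 1):
        for b in (0, 1):
            plaintext = labels["out"][a & b] + TAG
            table.append(_xor(plaintext, _pad(labels["a"][a], labels["b"][b])))
    secrets.SystemRandom().shuffle(table)  # hide which row is which
    return labels, table

def evaluate(table, label_a, label_b):
    """Evaluator: recovers one output label without learning the input bits."""
    pad = _pad(label_a, label_b)
    for row in table:
        plain = _xor(row, pad)
        if plain[LABEL_LEN:] == TAG:
            return plain[:LABEL_LEN]
    raise ValueError("no row decrypted; labels do not belong to this gate")

labels, table = garble_and()
out_label = evaluate(table, labels["a"][1], labels["b"][1])
print(out_label == labels["out"][1])  # True: 1 AND 1 = 1
```

Larger circuits chain gates together by using one gate's output labels as the next gate's input labels.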

2. Zero-Knowledge Range Proofs

Zero-knowledge range proofs are a type of zero-knowledge proof (ZKP) that allows one party to prove to another that a hidden value falls within a specific range without revealing the value itself. Range proofs are particularly useful in zkML because many statements about a model reduce to range checks on hidden numbers.

In zkML, range proofs can be used, for example, to show that a model's accuracy on a private test set is above an agreed threshold without revealing the data or the ground truth labels. They can also show that intermediate values in a computation stay within the bounds the proof system expects.
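Range proofs are usually stated about a committed value. The sketch below shows the skeleton of one classic approach, bit decomposition over Pedersen commitments: the prover commits to each bit of the value separately, and the verifier checks that the bit commitments recombine into the commitment to the whole value. It is deliberately incomplete, since a real range proof also needs a zero-knowledge proof that every bit commitment hides 0 or 1, which is omitted here. The group parameters are toy values, and in practice nobody may know the discrete log of `H` with respect to `G`.

```python
import secrets

# Toy group (assumption, insecure): order-Q subgroup of the integers mod P.
P, Q = 2039, 1019
G, H = 4, 9  # two subgroup generators; their relation must be unknown in practice

def commit(value, blinding):
    """Pedersen commitment C = G^value * H^blinding mod P."""
    return pow(G, value % Q, P) * pow(H, blinding % Q, P) % P

def commit_bits(value, n_bits):
    """Commit to each bit of value separately, least significant bit first."""
    bits = [(value >> i) & 1 for i in range(n_bits)]
    blindings = [secrets.randbelow(Q) for _ in bits]
    commitments = [commit(b, r) for b, r in zip(bits, blindings)]
    return blindings, commitments

def recombine(commitments):
    """Verifier side: the product of C_i^(2^i) is a commitment to the full value."""
    acc = 1
    for i, c in enumerate(commitments):
        acc = acc * pow(c, 2**i, P) % P
    return acc

value, n_bits = 200, 8  # claim: 0 <= value < 2^8
blindings, commitments = commit_bits(value, n_bits)
total_blinding = sum(r << i for i, r in enumerate(blindings))
print(recombine(commitments) == commit(value, total_blinding))  # True
```

If every bit commitment is proven to hide 0 or 1, the recombined commitment can only hide a value between 0 and 2^n_bits - 1, which is exactly the range claim.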

Applications of zkML

Healthcare

The healthcare industry is a treasure trove of sensitive personal data, ranging from medical records to genetic information. zkML has the potential to revolutionize healthcare by enabling secure and privacy-preserving medical research, personalized treatment plans, and secure sharing of medical data among healthcare providers.

● Private Medical Research: zkML can empower researchers to conduct medical studies and analyze sensitive data without compromising patient privacy. By enabling computations on encrypted data, researchers can extract valuable insights from medical records without revealing the identities of individual patients.

● Personalized Treatment Plans: zkML can facilitate the development of personalized treatment plans tailored to each patient's unique medical history and genetic profile. By enabling secure analysis of patient data, healthcare providers can make informed treatment decisions while safeguarding patient privacy.

● Secure Data Sharing: zkML can enable secure sharing of medical data among healthcare providers, facilitating collaborative care and improving patient outcomes. By ensuring that only authorized parties can access and analyze patient data, zkML can prevent unauthorized access and data breaches.

Finance

The financial sector handles vast amounts of sensitive financial data, including transaction records, credit scores, and investment portfolios. zkML can transform the financial industry by enabling secure and privacy-preserving fraud detection, risk assessment, and personalized financial services.

● Private Fraud Detection: zkML can empower financial institutions to detect fraudulent activities without exposing sensitive financial data. By analyzing encrypted transaction patterns, fraud detection systems can identify anomalies and potential scams without compromising customer privacy.

● Privacy-Preserving Risk Assessment: zkML can facilitate privacy-preserving risk assessment for creditworthiness and insurance underwriting. By enabling computations on encrypted financial data, risk assessment models can provide accurate evaluations without revealing individual financial details.

● Personalized Financial Services: zkML can enable the development of personalized financial services tailored to each customer's unique financial situation and risk profile. By analyzing encrypted financial data, financial institutions can offer customized recommendations and investment strategies while safeguarding customer privacy.

Social Media

Social media platforms collect and store vast amounts of personal data, including user profiles, social interactions, and online behaviour. zkML can empower social media users to control their privacy and benefit from personalized recommendations and targeted advertising without compromising their sensitive information.

● User-Controlled Privacy: zkML can enable social media users to selectively share their data with specific applications or services while maintaining control over their privacy settings. By allowing users to encrypt their data and control its access, zkML can empower individuals to manage their online privacy.

● Privacy-Preserving Recommendations: zkML can facilitate the development of privacy-preserving recommendation systems that provide personalized suggestions and targeted advertising without revealing sensitive user data. By analyzing encrypted user profiles and activity patterns, recommendation systems can offer relevant recommendations without compromising user privacy.

● Secure Data Analysis: zkML can enable social media platforms to analyze user data for research and social insights without revealing individual identities or sensitive information. By performing computations on encrypted data, social scientists can gain valuable insights into social trends and behaviours while safeguarding user privacy.

These examples illustrate the transformative potential of zkML across various domains. By enabling privacy-preserving data analysis and secure collaboration, zkML can revolutionize industries, empower individuals, and usher in a new era of data-driven insights without compromising privacy.

Challenges and limitations of implementing zkML

Despite its immense potential to revolutionize various industries, zero-knowledge machine learning (zkML) faces several challenges and limitations in real-world applications. These challenges stem from the inherent complexity of zkML protocols, computational efficiency considerations, and the need for standardized implementations.

1. Computational Efficiency: zkML protocols often involve intensive computations. Generating a proof typically costs far more time and memory than running the model itself, and the gap grows with model size. This computational overhead can pose a challenge for real-time applications or large-scale data analysis.

2. Standardization and Interoperability: The lack of standardized zkML protocols and frameworks can hinder interoperability and adoption. Different zkML implementations may have varying levels of performance, security, and compatibility, making it difficult for developers to choose and integrate appropriate tools into their applications.

3. Privacy-Preserving Model Updates: Updating machine learning models in a privacy-preserving manner remains an active area of research. While zkML can enable secure training and inference, updating models with new data without revealing sensitive information presents a significant challenge.

4. Integration with Existing Systems: Integrating zkML into existing machine learning workflows and systems can be complex and require significant modifications. Existing data pipelines, training procedures, and prediction services may need to be adapted to accommodate zkML protocols.

5. Balancing Privacy and Utility: Achieving a balance between privacy and utility is crucial for zkML applications. While zkML protects sensitive data, it may also introduce trade-offs in terms of model accuracy or the granularity of insights that can be extracted from the data.

6. User Adoption and Education: Widespread adoption of zkML requires user education and trust in zkML protocols. Users need to understand the benefits and limitations of zkML and be confident that their data is protected.
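One concrete source of both the efficiency cost and the accuracy trade-off listed above is number representation. Proof systems generally work over integers in a finite field rather than floating-point numbers, so model weights and inputs have to be quantized to fixed point first. The sketch below illustrates the idea with a dot product. The field modulus and scale factor are illustrative assumptions, not the parameters of any particular proof system.

```python
FIELD = 2**61 - 1  # illustrative prime modulus (a Mersenne prime)
SCALE = 2**8       # fixed-point scale: 8 fractional bits

def to_field(x):
    """Quantize a float to a field element; negatives wrap around the modulus."""
    return round(x * SCALE) % FIELD

def from_field(v, scale):
    """Map a field element back to a float, treating large values as negative."""
    signed = v if v <= FIELD // 2 else v - FIELD
    return signed / scale

def field_dot(weights, inputs):
    """Dot product computed entirely in field arithmetic."""
    return sum(to_field(w) * to_field(x) for w, x in zip(weights, inputs)) % FIELD

# Each product carries two scale factors, so the result is scaled by SCALE**2.
exact = from_field(field_dot([0.5, -1.25], [2.0, 4.0]), SCALE * SCALE)
print(exact)  # -4.0, exact because every value is a multiple of 1/256

approx = from_field(field_dot([0.1], [0.3]), SCALE * SCALE)
print(abs(approx - 0.03) < 0.01)  # True: close, but no longer exact
```

A larger scale factor reduces the rounding error but makes the numbers bigger, which in turn increases the cost of the proof.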

Despite these challenges, ongoing research and development efforts are actively addressing these limitations. The development of more efficient zkML protocols, the standardization of zkML frameworks, and the integration of zkML with existing systems will pave the way for broader adoption and real-world applications of zkML.

Conclusion

To conclude our exploration of zero-knowledge machine learning (zkML), it is worth recapping the key takeaways. zkML addresses a central problem in privacy-preserving machine learning: safeguarding sensitive data while still drawing on data-driven insights.

At the heart of zkML lies the concept of zero-knowledge proofs (ZKPs), cryptographic tools that let one party prove to another that they possess certain knowledge without revealing that knowledge itself. This property is what allows zkML to support the applications described above.

zkML tackles the fundamental challenge of privacy preservation in machine learning by enabling secure and private data analysis. By keeping inputs private and proving that computations on them were carried out correctly, zkML ensures that sensitive information remains confidential while still allowing for the extraction of valuable insights. This empowers organizations to leverage data-driven decision-making without compromising individual privacy.

The potential applications of zkML are vast and multifaceted, spanning across various industries. In the healthcare sector, zkML can facilitate secure medical research, personalized treatment plans, and secure data sharing among healthcare providers. In the financial domain, zkML can enable fraud detection, risk assessment, and personalized financial services without exposing sensitive financial data. Social media platforms can also harness zkML to empower users with control over their privacy while still benefiting from personalized recommendations and targeted advertising.

Despite the challenges and limitations that zkML faces in real-world implementation, the ongoing research and development efforts are rapidly addressing these obstacles. The development of more efficient zkML protocols, the standardization of zkML frameworks, and the integration of zkML with existing systems will pave the way for broader adoption and real-world applications of zkML.

As zkML continues to mature, we can anticipate a future where privacy-preserving machine learning becomes an integral part of our daily lives. zkML holds immense potential to transform industries, empower individuals, and usher in a new era of data-driven insights that respects and safeguards the fundamental right to privacy.